Data description and experimental environment

In this study, the dataset was sourced from Chinese book resources on the Duxiu academic platform18. Class Z (General Books) consists mainly of special publication types such as dictionaries, encyclopedias, and yearbooks; its sample size is relatively small and its records frequently have missing fields. We therefore selected the 21 major categories A–X, covering complete metadata for 132,803 books, including key fields such as book titles, subject terms, abstracts, and Chinese Library Classification (CLC) codes (as shown in Fig. 2).

The primary categories of the Chinese Library Classification (CLC) system used here are as follows: A: Marxism, Leninism, Mao Zedong Thought, Deng Xiaoping Theory; B: Philosophy, Religion; C: Social Sciences (General); D: Politics & Law; E: Military Affairs; F: Economics; G: Culture, Science, Education, and Sports; H: Languages and Linguistics; I: Literature; J: Arts; K: History and Geography; N: General Natural Sciences; O: Mathematics, Physics, and Chemistry; P: Astronomy and Earth Sciences; Q: Biological Sciences; R: Medicine and Health Sciences; S: Agricultural Sciences; T: Industrial Technology; U: Transportation; V: Aerospace Science and Technology; and X: Environmental Science and Safety Engineering.
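For illustration only, the 21 classes above can be encoded as a lookup table, with a book's label taken from the first letter of its full CLC call number (for example, the hypothetical code "TP391.1" falls under class T). The table, the helper `top_level_class`, and the alphabetical label ids are assumptions made for this sketch, not the study's preprocessing code.

```python
from typing import Optional

# Hypothetical lookup table for the 21 top-level CLC classes used in this study
# (class Z is excluded, as described above).
CLC_TOP_LEVEL = {
    "A": "Marxism, Leninism, Mao Zedong Thought, Deng Xiaoping Theory",
    "B": "Philosophy, Religion",
    "C": "Social Sciences (General)",
    "D": "Politics & Law",
    "E": "Military Affairs",
    "F": "Economics",
    "G": "Culture, Science, Education, and Sports",
    "H": "Languages and Linguistics",
    "I": "Literature",
    "J": "Arts",
    "K": "History and Geography",
    "N": "General Natural Sciences",
    "O": "Mathematics, Physics, and Chemistry",
    "P": "Astronomy and Earth Sciences",
    "Q": "Biological Sciences",
    "R": "Medicine and Health Sciences",
    "S": "Agricultural Sciences",
    "T": "Industrial Technology",
    "U": "Transportation",
    "V": "Aerospace Science and Technology",
    "X": "Environmental Science and Safety Engineering",
}

# Assumed label encoding: integer ids in alphabetical order of the class letters.
LABEL_IDS = {letter: i for i, letter in enumerate(sorted(CLC_TOP_LEVEL))}


def top_level_class(clc_code: str) -> Optional[str]:
    """Return the top-level class letter of a CLC code, or None if it is not one of the 21 classes."""
    letter = clc_code.strip()[:1].upper()
    return letter if letter in CLC_TOP_LEVEL else None
```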

To ensure reliable and reproducible results, the dataset was partitioned by stratified sampling: the StratifiedShuffleSplit method was used to divide it into training, validation, and test sets in an 8:1:1 ratio. Stratification keeps the class distribution of every subset consistent with that of the full dataset, avoiding the class bias that purely random partitioning can introduce.
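scikit-learn's `StratifiedShuffleSplit` yields one train/test partition per split, so one plausible way to obtain an 8:1:1 split is two passes: first 80% vs. 20%, then the held-out 20% halved into validation and test. This is a sketch under that assumption; the helper name and the random seed are hypothetical.

```python
import numpy as np
from sklearn.model_selection import StratifiedShuffleSplit


def stratified_80_10_10(labels, seed=42):
    """Return (train, val, test) index arrays in an 8:1:1 ratio, preserving class proportions."""
    labels = np.asarray(labels)
    idx = np.arange(len(labels))

    # Pass 1: 80% training vs. 20% held out, stratified on the labels.
    first = StratifiedShuffleSplit(n_splits=1, test_size=0.2, random_state=seed)
    train_idx, rest_idx = next(first.split(idx, labels))

    # Pass 2: split the held-out 20% evenly into validation and test, again stratified.
    second = StratifiedShuffleSplit(n_splits=1, test_size=0.5, random_state=seed)
    val_rel, test_rel = next(second.split(rest_idx, labels[rest_idx]))
    return train_idx, rest_idx[val_rel], rest_idx[test_rel]
```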

This study uses Adam as the optimizer and cross-entropy loss as the objective function, with the learning rate adjusted dynamically by the ReduceLROnPlateau strategy. All experiments were conducted on a workstation with an Intel® Xeon® Gold 6248R CPU @ 3.00 GHz × 48 and NVIDIA RTX A6000 (GA102GL) graphics cards, using the PyTorch framework under Ubuntu 22.04.6 LTS. The model was trained from scratch for 10 epochs with an initial learning rate of \(1\times 10^{-5}\), a batch size of 256, and early stopping; the complete training process took approximately 30 minutes. Four neural network architectures were selected as baseline models: LSTM19, TextCNN, DPCNN20, and Transformer21. Each is described below.
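The optimizer, loss, scheduler, and early-stopping combination described above can be sketched in PyTorch as follows. The ReduceLROnPlateau factor and patience, the early-stopping patience, and the choice to monitor validation loss are assumptions, since the text does not report them.

```python
import copy

import torch
from torch import nn


def train(model, train_loader, val_loader, epochs=10, lr=1e-5, patience=3, device="cpu"):
    """Train with Adam + cross-entropy, ReduceLROnPlateau, and early stopping.

    Returns the model restored to its best-validation-loss weights, and that loss.
    """
    model.to(device)
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    criterion = nn.CrossEntropyLoss()
    # Assumed schedule: halve the LR once validation loss has failed to improve
    # for more than `patience=1` epoch.
    scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(
        optimizer, mode="min", factor=0.5, patience=1
    )

    best_loss, best_state, bad_epochs = float("inf"), None, 0
    for _ in range(epochs):
        model.train()
        for x, y in train_loader:
            x, y = x.to(device), y.to(device)
            optimizer.zero_grad()
            loss = criterion(model(x), y)
            loss.backward()
            optimizer.step()

        # Average validation loss over all validation samples.
        model.eval()
        total, count = 0.0, 0
        with torch.no_grad():
            for x, y in val_loader:
                x, y = x.to(device), y.to(device)
                total += criterion(model(x), y).item() * y.size(0)
                count += y.size(0)
        val_loss = total / count
        scheduler.step(val_loss)

        # Early stopping: stop after `patience` epochs without improvement.
        if val_loss < best_loss:
            best_loss, bad_epochs = val_loss, 0
            best_state = copy.deepcopy(model.state_dict())
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                break

    model.load_state_dict(best_state)
    return model, best_loss
```

With the reported settings, this would be called with `epochs=10` and `lr=1e-5`, using data loaders built with `batch_size=256`.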

LSTM

The Long Short-Term Memory (LSTM) network is a variant of the conventional recurrent neural network, designed to mitigate the vanishing and exploding gradient problems that arise when processing long sequences. Its key innovation is a gating mechanism comprising input, forget, and output gates, which selectively retains and discards information and thereby substantially improves the modeling of long-range dependencies. Important information is carried forward through the cell state, allowing the network to learn long-term temporal relationships; this makes LSTM well suited to sequential modeling tasks such as machine translation, speech recognition, and financial forecasting. Despite its strong performance in sequence modeling, LSTM processes tokens serially, which slows training and still limits its ability to handle extremely long sequences.
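A minimal LSTM baseline might look like the following. It assumes the model receives pre-computed token embeddings of shape (batch, seq_len, embed_dim), for example from DeepSeek; the dimensions are placeholders, not the configuration used in the experiments.

```python
import torch
from torch import nn


class LSTMClassifier(nn.Module):
    """Minimal LSTM text classifier over pre-computed token embeddings (sketch)."""

    def __init__(self, embed_dim=768, hidden_dim=256, num_classes=21):
        super().__init__()
        self.lstm = nn.LSTM(embed_dim, hidden_dim, batch_first=True)
        self.fc = nn.Linear(hidden_dim, num_classes)

    def forward(self, x):  # x: (batch, seq_len, embed_dim)
        # h_n is the final hidden state after the gates have processed the whole sequence.
        _, (h_n, _) = self.lstm(x)
        return self.fc(h_n[-1])  # logits: (batch, num_classes)
```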

TextCNN

TextCNN is a seminal application of convolutional neural networks to text classification, using convolutional kernels of several sizes to extract local textual features. Unlike fully connected architectures, the model has a four-layer structure: input, convolutional, pooling, and fully connected layers. The input layer represents each word as a vector using techniques such as Word2Vec, GloVe, or TF-IDF weighting. The convolutional layer then slides kernels whose width equals the word-vector dimensionality and whose lengths vary, capturing n-gram features at multiple granularities. The pooling layer applies max-pooling to reduce dimensionality while retaining the most salient features, and the final fully connected layer combines them for classification. By carrying the local connectivity and parameter sharing of image convolutions over to text, the model extracts local semantic features effectively with relatively few parameters, and it is both accurate and efficient in text classification.
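The four-layer TextCNN structure can be sketched as below. A `Conv1d` whose input channels equal the embedding dimension is equivalent to a 2-D kernel spanning the full word-vector width, with the kernel size setting the n-gram length. The kernel sizes, filter count, and dropout rate are illustrative assumptions.

```python
import torch
import torch.nn.functional as F
from torch import nn


class TextCNN(nn.Module):
    def __init__(self, embed_dim=768, num_classes=21, kernel_sizes=(2, 3, 4),
                 num_filters=128, dropout=0.5):
        super().__init__()
        # One convolution per n-gram length; each spans the full embedding dimension.
        self.convs = nn.ModuleList(nn.Conv1d(embed_dim, num_filters, k) for k in kernel_sizes)
        self.dropout = nn.Dropout(dropout)
        self.fc = nn.Linear(num_filters * len(kernel_sizes), num_classes)

    def forward(self, x):  # x: (batch, seq_len, embed_dim); seq_len >= max kernel size
        x = x.transpose(1, 2)  # Conv1d expects (batch, channels, seq_len)
        # Max-over-time pooling keeps the strongest response of each filter.
        pooled = [F.relu(conv(x)).max(dim=2).values for conv in self.convs]
        return self.fc(self.dropout(torch.cat(pooled, dim=1)))
```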

DPCNN

The Deep Pyramid Convolutional Neural Network (DPCNN) is an efficient architecture for text classification whose core idea is to capture textual features hierarchically and progressively. The model first applies a text region embedding layer, which converts word-level representations into embeddings of multi-word regions and thereby strengthens local context modeling. It then forms a pyramid by alternately stacking convolution blocks (each containing two convolutional layers with pre-activation and a residual connection) and stride-2 max-pooling layers. The number of feature maps is kept constant while each pooling step halves the sequence length, so the receptive field grows progressively and long-range semantic relationships are captured at low computational cost. This structure has two key advantages: (i) because each downsampling step halves the cost of the following block, the total cost of all blocks is bounded by roughly twice that of the first, full-length block; and (ii) residual connections keep training of the deep network stable. The model can additionally use unsupervised pre-trained embeddings (e.g., Word2Vec, GloVe) to improve feature extraction. Together, these design choices give DPCNN a favorable balance between global semantic understanding and computational efficiency in text classification.
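The following sketch shows the DPCNN pyramid: a region embedding, pre-activation residual blocks, and stride-2 max-pooling with a fixed number of feature maps. It is a simplified illustration rather than the baseline's exact configuration; the number of downsampling steps, the filter count, and the final global max-pooling are assumptions. Because each pooling step halves the sequence length, the blocks together cost about 1 + 1/2 + 1/4 + ... < 2 times the first block.

```python
import torch
import torch.nn.functional as F
from torch import nn


class PreActResidualBlock(nn.Module):
    """Two 3-wide convolutions with pre-activation and an identity shortcut."""

    def __init__(self, channels):
        super().__init__()
        self.conv1 = nn.Conv1d(channels, channels, kernel_size=3, padding=1)
        self.conv2 = nn.Conv1d(channels, channels, kernel_size=3, padding=1)

    def forward(self, x):
        # Pre-activation: ReLU is applied before each convolution.
        return x + self.conv2(F.relu(self.conv1(F.relu(x))))


class DPCNN(nn.Module):
    def __init__(self, embed_dim=768, num_filters=250, num_classes=21, num_downsamples=4):
        super().__init__()
        # Region embedding: a convolution over 3-token regions of the input embeddings.
        self.region = nn.Conv1d(embed_dim, num_filters, kernel_size=3, padding=1)
        self.first_block = PreActResidualBlock(num_filters)
        # Stride-2 pooling halves the length; the number of feature maps stays fixed.
        self.pool = nn.MaxPool1d(kernel_size=3, stride=2, padding=1)
        self.blocks = nn.ModuleList(PreActResidualBlock(num_filters) for _ in range(num_downsamples))
        self.fc = nn.Linear(num_filters, num_classes)

    def forward(self, x):  # x: (batch, seq_len, embed_dim)
        x = self.first_block(self.region(x.transpose(1, 2)))
        for block in self.blocks:
            x = block(self.pool(x))  # downsample, then convolve at half the length
        x = x.max(dim=2).values  # global max-pooling over the remaining positions
        return self.fc(x)
```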

Transformer

The Transformer advanced sequence modeling through its self-attention mechanism. Unlike recurrent architectures such as RNNs and LSTMs, it dispenses with sequential processing entirely and instead uses parallelizable attention to capture global dependencies, which greatly improves computational efficiency. Its key components are: (i) multi-head attention, which models relationships between positions in several representation subspaces in parallel and enriches the learned features; (ii) positional encoding, which supplies the word-order information the architecture would otherwise lack because it has no recurrence; and (iii) position-wise feed-forward networks, which apply nonlinear transformations that increase representational capacity. These innovations have made the Transformer a foundational framework in both natural language processing and computer vision, with state-of-the-art results in tasks such as machine translation and text generation.
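A compact Transformer-encoder classifier with sinusoidal positional encoding is sketched below using PyTorch's built-in `nn.TransformerEncoderLayer`, which bundles multi-head self-attention and the position-wise feed-forward network. Mean-pooling over tokens, the layer count, and the head count are assumptions; `d_model` must be even and divisible by `nhead`.

```python
import math

import torch
from torch import nn


class SinusoidalPositionalEncoding(nn.Module):
    """Adds fixed sin/cos position signals (d_model must be even)."""

    def __init__(self, d_model, max_len=512):
        super().__init__()
        pos = torch.arange(max_len).unsqueeze(1)  # (max_len, 1)
        div = torch.exp(torch.arange(0, d_model, 2) * (-math.log(10000.0) / d_model))
        pe = torch.zeros(max_len, d_model)
        pe[:, 0::2] = torch.sin(pos * div)  # even dimensions
        pe[:, 1::2] = torch.cos(pos * div)  # odd dimensions
        self.register_buffer("pe", pe)

    def forward(self, x):  # x: (batch, seq_len, d_model), seq_len <= max_len
        return x + self.pe[: x.size(1)]


class TransformerClassifier(nn.Module):
    def __init__(self, d_model=768, nhead=8, num_layers=2, num_classes=21):
        super().__init__()
        self.pos = SinusoidalPositionalEncoding(d_model)
        layer = nn.TransformerEncoderLayer(d_model, nhead, dim_feedforward=4 * d_model,
                                           batch_first=True)
        self.encoder = nn.TransformerEncoder(layer, num_layers)
        self.fc = nn.Linear(d_model, num_classes)

    def forward(self, x):  # x: (batch, seq_len, d_model)
        h = self.encoder(self.pos(x))
        return self.fc(h.mean(dim=1))  # mean-pool over tokens, then classify
```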

Evaluation metrics

For multi-class text classification, we use several complementary metrics so that model performance is assessed objectively from multiple perspectives. The confusion matrix (also called an error matrix or contingency table) is a fundamental tool for evaluating classifiers (as shown in Table 1). In the binary case it has four entries: True Positives (TP), positive samples correctly predicted as positive; False Negatives (FN), positive samples incorrectly predicted as negative; False Positives (FP), negative samples incorrectly predicted as positive; and True Negatives (TN), negative samples correctly predicted as negative. Besides giving an intuitive view of binary classification outcomes, the matrix extends naturally to the multi-class case by adding one row and one column per class: diagonal elements count the correct classifications for each class, while off-diagonal elements reveal which classes are confused with one another. Per-class TP, FP, and FN are then obtained by treating each class in turn as positive and all other classes as negative.
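To make the multi-class extension concrete, the sketch below builds a confusion matrix with NumPy and derives each class's one-vs-rest TP, FP, FN, and TN counts from it. The function names are hypothetical; scikit-learn's `sklearn.metrics.confusion_matrix` uses the same convention of rows for true labels and columns for predictions.

```python
import numpy as np


def build_confusion_matrix(y_true, y_pred, num_classes):
    """Rows = true class, columns = predicted class."""
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def one_vs_rest_counts(cm):
    """Per-class TP, FP, FN, TN, treating each class in turn as 'positive'."""
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as class k but truly another class
    fn = cm.sum(axis=1) - tp  # truly class k but predicted as another class
    tn = cm.sum() - tp - fp - fn
    return tp, fp, fn, tn
```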

Precision

Precision, also called positive predictive value, is the proportion of true positives among all samples that the model predicts as positive. Higher precision means fewer false positives relative to the number of positive predictions.

$$\begin{aligned} \text{Precision}=\frac{TP}{TP+FP} \end{aligned} \tag{9}$$

Recall

Recall, also called sensitivity or true positive rate, is the proportion of actual positive samples that the model correctly identifies. Higher recall means fewer false negatives, i.e., more complete detection of the class.

$$\begin{aligned} \text{Recall}=\frac{TP}{TP+FN} \end{aligned} \tag{10}$$

F1-score

The F1-score combines precision and recall into a single metric by taking their harmonic mean. Because the harmonic mean is dominated by the smaller of the two values, a high F1-score requires both high precision and high recall.

$$\begin{aligned} F1=2\times \frac{\text{Precision}\times \text{Recall}}{\text{Precision}+\text{Recall}} \end{aligned} \tag{11}$$
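Equations (9)–(11) can be applied per class directly from a multi-class confusion matrix, as in this NumPy sketch. Returning 0 when a denominator is zero is an assumed convention (scikit-learn exposes the same choice through its `zero_division` parameter), and `per_class_prf` is a hypothetical name.

```python
import numpy as np


def per_class_prf(cm):
    """Per-class precision, recall and F1 from a confusion matrix
    (rows = true class, columns = predicted class)."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp
    fn = cm.sum(axis=1) - tp
    # Define 0/0 as 0 (class never predicted, or never present).
    precision = np.divide(tp, tp + fp, out=np.zeros_like(tp), where=(tp + fp) > 0)
    recall = np.divide(tp, tp + fn, out=np.zeros_like(tp), where=(tp + fn) > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return precision, recall, f1
```

As a check against Table 2, class U's reported precision of 97.56% and recall of 95.64% give F1 = 2 × 0.9756 × 0.9564 / (0.9756 + 0.9564) ≈ 96.59%, matching the reported F1-score.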

Micro-average, Macro-average and Weighted-average

In multi-class classification, three averaging strategies are commonly used to aggregate per-class metrics (micro-, macro-, and weighted-averaging), each suited to different data distributions. Micro-averaging sums TP, FP, and FN over all classes and computes the metric once from these pooled counts, treating all predictions as a single whole; classes with more samples therefore dominate the result. In single-label multi-class classification, every false positive for one class is also a false negative for another, so micro-averaged precision, recall, and F1 all equal overall accuracy. Macro-averaging computes the metric for each class independently and takes the unweighted arithmetic mean, giving every class equal weight regardless of its size and making the result more sensitive to performance on minority classes. Weighted-averaging takes the mean of the per-class metrics weighted by each class's sample count (support), so that the result reflects the class distribution of the data.
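The three averaging strategies differ only in where the aggregation happens, which the following NumPy sketch makes explicit. It assumes a non-empty confusion matrix with at least one correct prediction; `averaged_prf` is a hypothetical name, and scikit-learn's `average="micro"`, `"macro"`, and `"weighted"` options follow the same definitions.

```python
import numpy as np


def averaged_prf(cm):
    """Micro-, macro- and weighted-averaged (precision, recall, F1) from a
    confusion matrix (rows = true class, columns = predicted class)."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp
    fn = cm.sum(axis=1) - tp
    support = cm.sum(axis=1)  # number of true samples per class

    def safe_div(a, b):
        return np.divide(a, b, out=np.zeros_like(a, dtype=float), where=b > 0)

    p = safe_div(tp, tp + fp)
    r = safe_div(tp, tp + fn)
    f1 = safe_div(2 * p * r, p + r)

    # Micro: pool the counts over all classes, then compute each metric once.
    # For single-label data sum(FP) == sum(FN), so all three equal accuracy.
    tp_sum, fp_sum, fn_sum = tp.sum(), fp.sum(), fn.sum()
    micro_p = tp_sum / (tp_sum + fp_sum)
    micro_r = tp_sum / (tp_sum + fn_sum)
    micro = (micro_p, micro_r, 2 * micro_p * micro_r / (micro_p + micro_r))

    # Macro: unweighted mean of the per-class scores (every class counts equally).
    macro = (p.mean(), r.mean(), f1.mean())

    # Weighted: per-class scores weighted by class support.
    w = support / support.sum()
    weighted = ((p * w).sum(), (r * w).sum(), (f1 * w).sum())
    return {"micro": micro, "macro": macro, "weighted": weighted}
```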

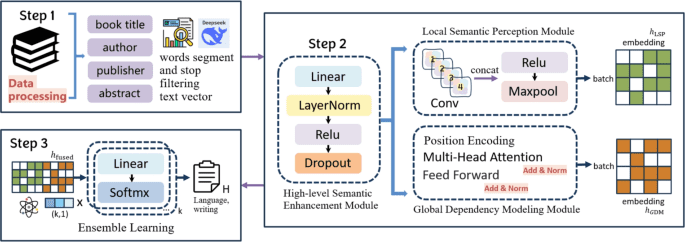

Implementation of book classification algorithm based on DeepSeek-R1-Distill model

Precision, recall, and F1-score were used as evaluation metrics to assess the model's classification performance comprehensively. Table 2 presents the results on the test set of the Chinese book dataset for all 21 book categories, together with the number of test samples per category (Support). The model performs strongly across many categories: category U achieves the best overall performance, with 97.56% precision, 95.64% recall, and a 96.59% F1-score, and categories A, Q, S, and R all attain F1-scores above 93%, indicating high classification accuracy and robust recognition for these categories.

Although the model's overall performance is satisfactory, some categories, particularly C and G, have relatively low recall (78.40% and 76.04%, respectively), indicating a non-negligible number of false negatives. This may stem from imbalanced data distributions or from high feature similarity between classes. Category K has the lowest F1-score of all 21 categories (79.21%) and therefore the greatest need for algorithmic refinement. Possible improvements include more sophisticated feature extraction or data augmentation strategies targeted at these classes. Overall, the experimental results indicate that the model is practical and generalizes well on the Chinese book classification task.

Comparative analysis of neural network architectures

Four well-established baseline models (LSTM, TextCNN, DPCNN, and Transformer) were implemented with DeepSeek embeddings to provide comparative benchmarks. The experimental results, detailed in Table 3, show measurable performance differences across all evaluation protocols.

Under micro-averaged metrics, LSTM and TextCNN performed comparably (71.90% vs. 71.27%), suggesting that conventional RNN and CNN architectures have similar capability on this task. DPCNN achieved better results (73.96%), consistent with its deep pyramid structure's greater capacity to capture long-range textual dependencies. The Transformer improved performance further (75.91%), reflecting the advantage of self-attention in text classification. Our proposed model consistently outperformed all baselines, supporting the effectiveness of its multi-level feature extraction and ensemble classification framework.

Ablation experiment

We used TF-IDF vectorization (sklearn.feature_extraction.text.TfidfVectorizer) as the conventional machine learning feature representation for comparison with our method. As shown in Fig. 3, our proposed method outperforms the variant built on traditional machine learning features (Ours_Trad) on all evaluation metrics. This stronger feature extraction indicates that embeddings from an LLM (DeepSeek) provide superior semantic encoding.
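A TF-IDF representation of book text can be produced with `TfidfVectorizer` as below. Because Chinese is written without spaces between words, this sketch assumes character unigrams and bigrams (`analyzer="char"`); the text does not state how the authors tokenized, and word segmentation would be an equally plausible choice. The example strings are made up.

```python
from sklearn.feature_extraction.text import TfidfVectorizer

# Assumption: character 1- and 2-grams instead of word segmentation.
vectorizer = TfidfVectorizer(analyzer="char", ngram_range=(1, 2), sublinear_tf=True)

# Hypothetical title/abstract strings standing in for real book records.
docs = [
    "机器学习导论",
    "中国近代史研究",
    "深度学习与自然语言处理",
]
X = vectorizer.fit_transform(docs)  # sparse matrix: (n_docs, vocabulary size)
```

The resulting sparse vectors would take the place of the DeepSeek embeddings as classifier input in a comparison such as Ours_Trad.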

We then evaluated the model with only the Local Semantic Perception Module (local) and with only the Global Dependency Modeling Module (global). As illustrated in Fig. 4, using both modules together achieves the best performance, indicating that the joint architecture effectively combines fine-grained local semantic extraction with global context modeling to yield more accurate and robust classification.

Furthermore, three conventional classifiers, K-Nearest Neighbors (KNN)22, Random Forest (RF)23, and Support Vector Machine (SVM)24, were implemented on DeepSeek embeddings to benchmark our proposed Feature Fusion and Integrated Classification approach. As illustrated in Fig. 5, KNN and RF performed worst, with average metrics of around 65%, and SVM ranked second. Our proposed method achieved the best results, surpassing all baselines by a clear margin and demonstrating the benefit of integrating multi-level features with ensemble learning for classification accuracy.
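The conventional-classifier comparison can be outlined with scikit-learn as sketched below. The inputs are assumed to be fixed-length document vectors (for example, pooled DeepSeek hidden states); the hyperparameters (k = 5, 200 trees, RBF kernel) and the use of macro-F1 for scoring are illustrative assumptions rather than the reported settings.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import f1_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def compare_classic_classifiers(X_train, y_train, X_test, y_test, seed=0):
    """Fit KNN, RF and SVM on fixed embedding vectors; return macro-F1 per model."""
    models = {
        # Distance- and margin-based models are sensitive to feature scale.
        "KNN": make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5)),
        "RF": RandomForestClassifier(n_estimators=200, random_state=seed),
        "SVM": make_pipeline(StandardScaler(), SVC(kernel="rbf", C=1.0)),
    }
    scores = {}
    for name, model in models.items():
        model.fit(X_train, y_train)
        scores[name] = f1_score(y_test, model.predict(X_test), average="macro")
    return scores
```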

Hyperparameter analysis for book classification algorithm

This study systematically investigates the batch size and learning rate hyperparameters of the book classification algorithm. Moderately sized batches offer two advantages: they keep training stable while still exposing the model to diverse data characteristics in each update. In the batch size experiments (Table 4), performance follows a unimodal pattern and peaks at a batch size of 256. Performance varies by no more than 2.5% across the batch sizes tested, indicating that the algorithm is robust to this parameter. The learning rate experiments (Table 5) show the best performance at \(1\times 10^{-5}\), marginally ahead of the second-best setting, \(2\times 10^{-5}\), whereas \(1\times 10^{-3}\) and \(2\times 10^{-4}\) cause near-complete training divergence. These results suggest that excessively small learning rates slow training and may leave the model in high-error local optima, while excessively large ones degrade performance. Based on this evidence, the final configuration uses a batch size of 256 and a learning rate of \(1\times 10^{-5}\).
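The batch-size and learning-rate studies vary one hyperparameter at a time while holding the other fixed. A generic sketch of that procedure is shown below; `evaluate` is a hypothetical callable that would train the model with a given configuration and return a validation score (higher is better).

```python
def one_factor_sweep(evaluate, base, grid):
    """Vary one hyperparameter at a time around `base`, keeping the others fixed.

    Returns ({param: [(value, score), ...]}, {param: best value}).
    """
    results, best = {}, {}
    for param, values in grid.items():
        # Override only `param`; every other setting comes from `base`.
        results[param] = [(v, evaluate({**base, param: v})) for v in values]
        best[param] = max(results[param], key=lambda pair: pair[1])[0]
    return results, best
```

For example, `base={"batch_size": 256, "lr": 1e-5}` with `grid={"lr": [1e-3, 2e-4, 2e-5, 1e-5]}` covers the learning rates discussed above; the batch sizes from Table 4 would be supplied the same way.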