Researchers with the University of Maryland are turning a robot dog they nicknamed Spot into a first responder that can assess patients at mass casualty scenes.

At a scene where there are more victims than medics, whether it’s a crime scene, the scene of an accident or a battlefield, the initial screening could one day be conducted by a robotic dog made by Boston Dynamics.

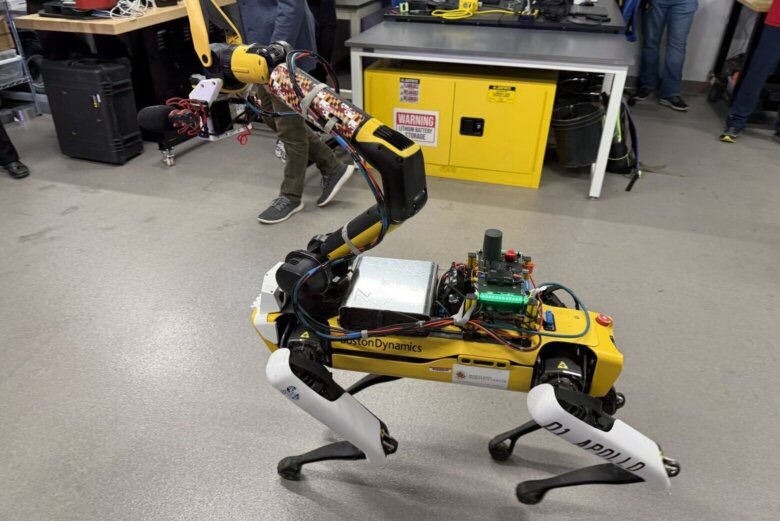

Researchers with the University of Maryland, in cooperation with the Defense Advanced Research Projects Agency, are turning a robot dog they nicknamed Spot into a first responder that can talk to and assess patients, and work with medics to make sure whoever needs help most urgently gets it fast.

“I’m here to help,” the robot says as it approaches a mannequin that, at least in this demo, had suffered a gunshot wound to the leg. “Can you tell me what happened?”

The computer on the dog includes a large language model artificial intelligence system, similar to ChatGPT, that can communicate with the patient.

“We buy pretty much the heaviest computer that it could carry, and we put it on there,” said Derek Paley, a professor in Maryland’s Department of Aerospace Engineering and the Institute for Systems Research. “We also add a lot of sensors to the arm here. These are the sensors that are used to assess a patient’s injuries.”

It all works together to determine someone’s condition.

“The depth camera can create a 3D image of the casualty, and each of these sensing modalities are fused in what we call an ‘inference engine,’ so that accumulates evidence to support the assessments that are shown here. So each assessment may be determined by combining information from multiple robots, multiple sensors and multiple sensor-processing algorithms,” Paley said.
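The researchers did not describe how their inference engine combines evidence. As a rough illustration only, one common way to accumulate evidence from several independent detectors is to add their log-odds. The sketch below assumes hypothetical sensor names and made-up confidence values, and it is not the Maryland team's method.

```python
import math


def fuse_log_odds(probabilities, prior=0.5):
    """Fuse per-sensor probabilities for one assessment by summing log-odds.

    Assumes the sensors are conditionally independent and that every
    probability (and the prior) lies strictly between 0 and 1.
    """
    def logit(p):
        return math.log(p / (1.0 - p))

    prior_logit = logit(prior)
    # Each sensor contributes its evidence relative to the shared prior.
    total = prior_logit + sum(logit(p) - prior_logit for p in probabilities)
    return 1.0 / (1.0 + math.exp(-total))


# Hypothetical detectors, each estimating "severe hemorrhage" for one casualty.
readings = {"depth_camera": 0.70, "thermal_camera": 0.60, "drone_image": 0.80}
fused = fuse_log_odds(readings.values())
print(f"fused confidence: {fused:.3f}")
```

Three moderately confident detectors that agree produce a fused estimate higher than any single one, which is the sense in which evidence "accumulates" across robots and sensors.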

At the very beginning of the response to the incident, an aerial drone will assess the situation on the ground, mapping out where potential victims are and sending that information to both Spot and medics on scene. Spot can then scour the area to get a closer look with all its cameras and sensors.

“The robots can explore, they can assess the number of casualties, where they’re all located, and actually provide that information to the medic in real time on a phone that’s attached to the medic’s chest,” Paley said. “So the medic can look down at their chest and see pins on a map where all the casualties are, color coded by the severity of injuries.”
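To make the medic's map view concrete, here is a minimal sketch of how casualty reports might be turned into color-coded pins, most urgent first. The category names, colors, field names and coordinates are all assumptions for illustration, loosely following common triage-tag conventions. The article does not specify which scheme the team uses.

```python
from dataclasses import dataclass

# Assumed severity categories and pin colors (not confirmed by the researchers).
SEVERITY_ORDER = ["immediate", "delayed", "minor"]
SEVERITY_COLORS = {"immediate": "red", "delayed": "yellow", "minor": "green"}


@dataclass
class Casualty:
    casualty_id: str
    x_m: float      # hypothetical map position, meters east of a reference point
    y_m: float      # hypothetical map position, meters north of a reference point
    severity: str   # one of SEVERITY_ORDER


def build_pins(casualties):
    """Return map pins sorted so the most severe casualties come first."""
    ranked = sorted(casualties, key=lambda c: SEVERITY_ORDER.index(c.severity))
    return [
        {
            "id": c.casualty_id,
            "position": (c.x_m, c.y_m),
            "color": SEVERITY_COLORS[c.severity],
        }
        for c in ranked
    ]


pins = build_pins([
    Casualty("C1", 12.0, 4.5, "minor"),
    Casualty("C2", 30.2, 18.0, "immediate"),
    Casualty("C3", 7.8, 22.1, "delayed"),
])
for pin in pins:
    print(pin)
```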

The robots are all doing it autonomously, too.

“They build a mosaic of images in a map to show where the casualties are, and then the ground robots, the Spots here, go to each casualty and they get things like vital signs and other assessments that the drones can’t perform,” Paley said. “That’s all preloaded onto the medic’s phone, so they have that information when they get to each casualty. They already know what the robot has assessed.”

Spot can even call out for a medic urgently if it determines a patient has critical injuries by shouting, “Medic, medic!”

All of this is still in the testing phase, but Paley thinks the technology could be deployable within the next couple of years.

“We’re able to provide valuable assessments to the medics while they’re under pressure to provide those interventions in timely fashion,” he said.


© 2025 WTOP. All Rights Reserved.